Friday, 22nd November 2024

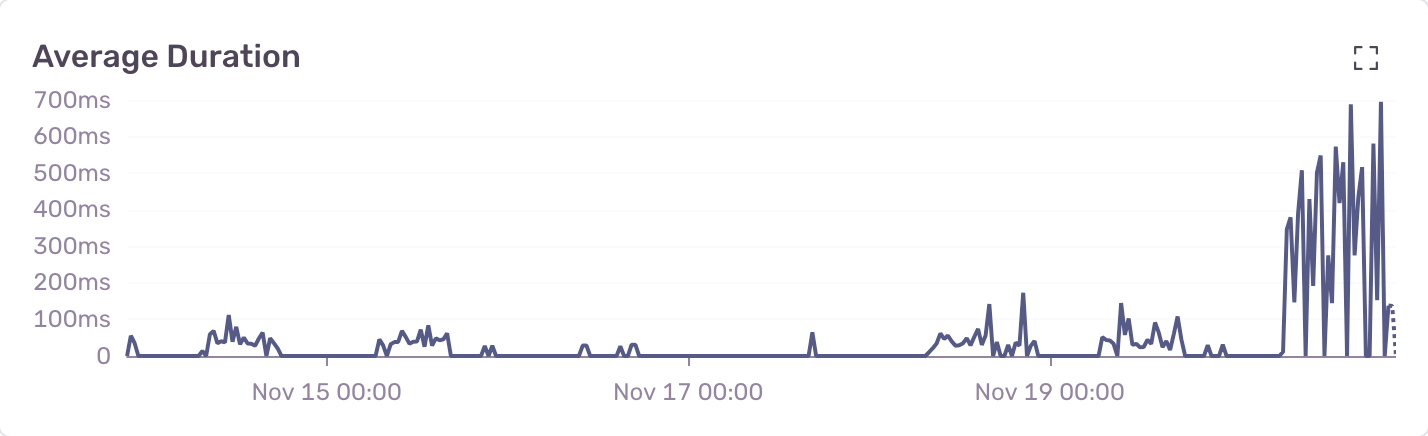

Upgrading your database should bring improvements, but sometimes, it introduces unexpected bottlenecks. Recently, we upgraded PostgreSQL from version 16 to 17 on Google Cloud SQL. The next day, a critical issue surfaced: several queries were performing 7x slower, severely impacting user experience.

The Problem

We noticed the slowdown early—around 7:30 AM, right as our users began their day. Affected queries that typically completed in 200ms were now taking over 1.4 seconds on average. Local tests, however, showed no such issues. The discrepancy pointed squarely at the production database.

At first, we suspected a specific query might be at fault. However, as we investigated, it became clear that this wasn’t an isolated case. The slowdown was affecting a wide range of queries.

We began with basic performance tuning: monitoring resource usage, analysing query plans with EXPLAIN ANALYZE, and reviewing our database configuration flags. A database restart provided temporary relief, but the slowdown returned almost immediately. Checking the Postgres 17 upgrade notes revealed no smoking gun, and our tests of Postgres 17 had gone smoothly in non-production environments.

What fixed it

Before proceeding, schedule a maintenance window or low-traffic period, as these operations may briefly impact availability. Testing on a database clone can help estimate duration.

Attempt 1: Reindexing the database

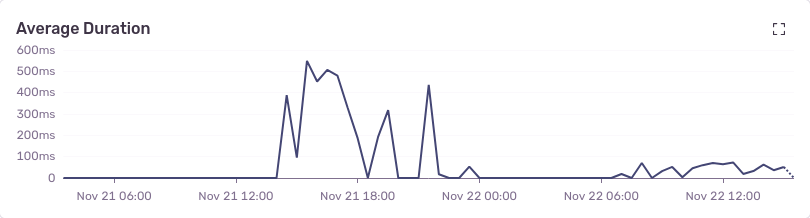

We started by reindexing everything, this brought immediate improvements, but only temporarily.

By mid-day, the next-day, performance had started to degrade again under heavier load.

It was clear reindexing alone wasn’t the full solution.

langpostgresql

REINDEX DATABASE database_name;

Attempt 2: Vacuum analyze

This step updates the database’s internal statistics, which the query planner uses to make decisions.

The result? A dramatic improvement.

Queries returned to their normal speed, and the query plans now looked much more like what we’d expect.

langpostgresql

VACUUM ANALYZE;

Why vacuum analyze worked

The root of the issue was likely corrupted or outdated planner statistics after the upgrade. PostgreSQL uses these statistics to estimate the cost of query execution and choose the most efficient plan. When we compared the EXPLAIN results for the same query on production and locally, we noticed stark differences. In production, the planner estimated very low costs (130-155), but the query took over 300ms to run—far slower than expected. Locally, the same query executed much faster with more realistic cost estimations.

This discrepancy indicated that the production database's query planner had incorrect or incomplete information, leading it to choose inefficient plans. Running VACUUM ANALYZE updated these statistics, aligning the planner’s estimations with reality and enabling it to generate more optimal query plans. The performance improvement was immediate, with queries returning to their expected speed.

After implementing both changes our query duration was sufficiently reduced:

Lessons learned

- Running

VACUUM ANALYZEshould be a standard part of any major PostgreSQL upgrade process. Don’t assume the database will handle this for you. - Comparing query plans between environments can reveal why performance differs and point you toward the root cause.

- Our first fix—reindexing—helped temporarily, but the real solution required digging deeper into how PostgreSQL was interpreting the data.